In the world of machine learning, data is the fuel that powers intelligent models. But not all data is created equal, especially when it comes to labelled data. High-quality labelled datasets are essential for supervised learning, yet labelling is often costly and time-consuming, and it frequently requires domain expertise. This is where active learning steps in: by letting the model choose which examples get labelled, it can often reach high performance with only a fraction of the labels that labelling at random would need.

Active learning is especially useful in fields such as medical imaging and legal document classification, or in any domain where labelled data is expensive or scarce. Because the algorithm chooses the most informative examples to be labelled, each annotation tends to contribute more to the model than a randomly chosen one would. This concept is gaining popularity, and those looking to work on real-world AI challenges can explore it in depth through a Data Science Course that offers hands-on projects and exposure to advanced model training techniques.

What is Active Learning?

Active learning is a machine learning technique that allows the model to actively query, or select, the most valuable data points from a pool of unlabelled data. These selected instances are then sent to an oracle (usually a human expert) for labelling. The core idea is simple: rather than labelling data at random, we can achieve better results by choosing carefully what to label.

In traditional supervised learning, a model is trained on a large labelled dataset and tested on unseen data. However, labelling data can be resource-intensive. Active learning flips the script—it starts with a small labelled dataset and incrementally improves the model by selecting the most informative data points for labelling.

There are three main types of active learning:

- Pool-based Sampling: The algorithm selects examples from a large pool of unlabelled data based on a specific query strategy.

- Stream-based (Online) Sampling: Data arrives in a stream, and the algorithm decides whether to query the label for each example on the fly.

- Membership Query Synthesis: The model generates new data points (rather than choosing from a pool) to be labelled by an oracle.

Each of these approaches has its place depending on the problem, data availability, and computational resources.
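To make the stream-based setting concrete, here is a minimal Python sketch. It assumes a binary classifier that returns a probability for the positive class; the `predict_proba` callable, the `toy_model` function, and the `margin` threshold are illustrative assumptions rather than part of any particular library. Each arriving example is flagged for labelling only when the model's probability falls close to 0.5.

```python
import math
from typing import Callable, Iterable, Iterator


def select_from_stream(
    stream: Iterable[float],
    predict_proba: Callable[[float], float],
    margin: float = 0.2,
) -> Iterator[float]:
    """Yield stream items whose positive-class probability lies within `margin` of 0.5.

    Each item is inspected once, as it arrives; items outside the band are
    skipped without being stored, unlike pool-based sampling, which ranks a
    whole pool before choosing.
    """
    for x in stream:
        p = predict_proba(x)
        if abs(p - 0.5) < margin:
            yield x  # uncertain enough: send this one to the oracle


def toy_model(x: float) -> float:
    """Hypothetical stand-in for a trained model: a logistic curve centred at 0."""
    return 1.0 / (1.0 + math.exp(-x))


incoming = [-3.0, -0.5, 0.0, 0.5, 3.0]
to_label = list(select_from_stream(incoming, toy_model))
print(to_label)  # [-0.5, 0.0, 0.5]
```

A wider `margin` sends more of the stream to the oracle; in practice the threshold would be tuned against the available labelling budget.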

Why Is Active Learning Important?

In many real-world applications, you might not have the luxury of a massive labelled dataset. Consider scenarios like:

- Classifying medical X-rays, where labelling requires a radiologist’s expertise.

- Identifying defects in manufacturing via images, where each label may require a technician’s review.

- Analysing legal documents, where only a trained lawyer can label them accurately.

In such cases, labelling hundreds of thousands of examples isn’t feasible. Active learning can substantially reduce the annotation burden while still producing robust models, which has made it an important technique in both industry and academia.

Popular Query Strategies in Active Learning

One of the defining aspects of active learning is the query strategy: how the model decides which unlabelled examples to label next. Some widely used methods include:

- Uncertainty Sampling: The model selects data points where it is least confident in its prediction. For instance, if a classifier gives a 50-50 probability between two classes, it’s an ideal candidate for labelling.

- Query-by-Committee (QBC): A committee of models votes on the label of each example. The example with the most disagreement is chosen for labelling.

- Expected Model Change: Selects the examples that, averaged over their possible labels, would change the current model the most if labelled and added to the training data.

- Diversity Sampling: Chooses examples that cover different regions of the input space, so the labelled set is not dominated by near-duplicates and the model sees a broader range of cases.
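The scoring rules behind several of these strategies are short enough to write out. The plain-Python sketch below assumes each model returns a list of class probabilities. It shows three common uncertainty measures (least confidence, margin, and entropy), vote entropy as one way to quantify committee disagreement in QBC, and a simplified expected-gradient-length score as one instance of expected model change. The function names and the example probabilities are illustrative, and the expected-gradient-length function assumes binary logistic regression whose current weights already sit near a minimum of the training loss.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def least_confidence(probs: Sequence[float]) -> float:
    """1 - max probability: higher means the model is less sure of its top guess."""
    return 1.0 - max(probs)


def margin_score(probs: Sequence[float]) -> float:
    """Gap between the two most likely classes: smaller means more uncertain."""
    top1, top2 = sorted(probs, reverse=True)[:2]
    return top1 - top2


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of the predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def vote_entropy(votes: Sequence[str]) -> float:
    """Query-by-Committee disagreement: entropy of the committee's label votes."""
    n = len(votes)
    return -sum((c / n) * math.log(c / n) for c in Counter(votes).values())


def expected_gradient_length(p: float, x: Sequence[float]) -> float:
    """Expected model change for binary logistic regression (simplified).

    The log-loss gradient for one example with label y is (p - y) * x.
    Averaging its norm over y ~ Bernoulli(p) gives 2 * p * (1 - p) * ||x||.
    Assumes the current model is near a minimum of the training loss, so only
    the new example contributes to the gradient.
    """
    norm = math.sqrt(sum(v * v for v in x))
    return 2 * p * (1 - p) * norm


# Class probabilities for three unlabelled examples (made-up numbers).
pool: Dict[str, List[float]] = {
    "a": [0.50, 0.50],
    "b": [0.90, 0.10],
    "c": [0.60, 0.40],
}
most_uncertain = max(pool, key=lambda k: least_confidence(pool[k]))
print(most_uncertain)  # a
print(vote_entropy(["cat", "cat", "dog"]) > vote_entropy(["cat", "cat", "cat"]))  # True
```

In a real loop these scores would be computed for every pool item and the top-scoring items sent to the oracle; note that for `margin_score` the most uncertain item is the one with the smallest value.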

Each method has its strengths and weaknesses, and a hybrid approach (for example, combining uncertainty with diversity) often yields the best results.
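One common way to build such a hybrid is to shortlist the most uncertain candidates and then spread the final picks across that shortlist so they are not near-duplicates. The sketch below does the spreading with greedy k-center selection, a core-set style heuristic chosen here for illustration; the text itself does not prescribe a specific diversity method. The 2-D points, the `shortlist` size, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def greedy_k_center(candidates: Sequence[Point], labelled: Sequence[Point], k: int) -> List[int]:
    """Repeatedly pick the candidate farthest from everything labelled or already picked."""
    # Distance from each candidate to its nearest labelled point (inf if none).
    nearest = [min((math.dist(c, l) for l in labelled), default=math.inf) for c in candidates]
    chosen: List[int] = []
    for _ in range(min(k, len(candidates))):
        remaining = [i for i in range(len(candidates)) if i not in chosen]
        idx = max(remaining, key=lambda i: nearest[i])
        chosen.append(idx)
        # The new pick now "covers" nearby candidates, shrinking their distances.
        for i, c in enumerate(candidates):
            nearest[i] = min(nearest[i], math.dist(c, candidates[idx]))
    return chosen


def hybrid_select(
    candidates: Sequence[Point],
    uncertainty: Sequence[float],
    labelled: Sequence[Point],
    shortlist: int,
    k: int,
) -> List[int]:
    """Keep the `shortlist` most uncertain candidates, then choose k diverse ones among them."""
    order = sorted(range(len(candidates)), key=lambda i: uncertainty[i], reverse=True)[:shortlist]
    picked = greedy_k_center([candidates[i] for i in order], labelled, k)
    return [order[j] for j in picked]  # map back to indices in `candidates`


points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0), (10.0, 0.0)]
scores = [0.9, 0.9, 0.8, 0.8, 0.1]
print(hybrid_select(points, scores, labelled=[(0.0, 1.0)], shortlist=4, k=2))  # [3, 1]
```

Picking the top two by uncertainty alone would return indices 0 and 1, two almost identical points; the hybrid instead returns one point from each cluster.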

Applications of Active Learning

Active learning has a wide range of applications, especially in domains where labelled data is expensive to acquire. Here are some notable areas:

- Healthcare: Labelling MRI or CT scan images can be extremely costly and time-consuming. Active learning helps radiologists focus only on the most informative cases.

- Finance: Detecting fraud from transaction data, where only a small subset of transactions can be reviewed and labelled.

- Retail and E-commerce: Categorising products or analysing customer reviews where manual labelling isn’t scalable.

- Autonomous Driving: Labelling every street object across millions of images and video frames is impractical; active learning helps prioritise which frames are worth annotating.

- Natural Language Processing (NLP): In tasks like named entity recognition, where human annotation is expensive, active learning can significantly reduce costs.

A strong foundation in machine learning, along with hands-on experience in techniques like these, is often built through a Data Science Course that prepares professionals to tackle such real-world challenges efficiently.

How to Implement Active Learning in Practice

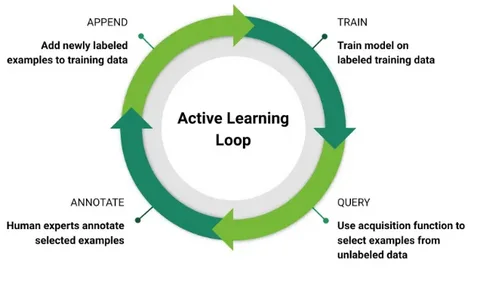

To implement active learning, you typically follow these steps:

- Start with a Small Labelled Dataset: Begin with a modest amount of labelled data to train a baseline model.

- Train an Initial Model: Use the labelled data to build a model.

- Select a Query Strategy: Decide on an uncertainty-based or diversity-based method to identify which examples to label next.

- Query Oracle: Get the labels for selected data points from a human expert.

- Update Model: Retrain the model with the newly labelled data.

- Repeat: Iterate the process until you reach satisfactory performance or the labelling budget is exhausted.
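The cycle above can be sketched in plain Python. This is a toy illustration under stated assumptions: the model is a hypothetical one-feature logistic regression trained by gradient descent, the oracle is simulated by a known threshold rule standing in for a human expert, and the query strategy is uncertainty sampling (probability closest to 0.5). The function names `train`, `active_learning_loop`, and `simulated_oracle` are illustrative; libraries such as modAL wrap the same kind of loop around scikit-learn estimators.

```python
import math
from typing import Callable, List, Sequence, Tuple

Model = Tuple[float, float]  # (weight, bias) of a one-feature logistic regression


def predict_proba(model: Model, x: float) -> float:
    w, b = model
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def train(X: Sequence[float], y: Sequence[int], epochs: int = 200, lr: float = 0.5) -> Model:
    """Fit a one-feature logistic regression with batch gradient descent (toy trainer)."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        gw = gb = 0.0
        for x, t in zip(X, y):
            err = predict_proba((w, b), x) - t  # derivative of log-loss w.r.t. the logit
            gw += err * x
            gb += err
        w -= lr * gw / len(X)
        b -= lr * gb / len(X)
    return w, b


def active_learning_loop(
    pool: Sequence[float],
    oracle: Callable[[float], int],
    seed_X: Sequence[float],
    seed_y: Sequence[int],
    budget: int,
) -> Tuple[Model, List[float], List[float]]:
    X, y = list(seed_X), list(seed_y)  # start with a small labelled dataset
    unlabelled = list(pool)
    queried: List[float] = []
    model = train(X, y)  # train an initial model
    for _ in range(budget):  # repeat until the labelling budget is spent
        if not unlabelled:
            break
        # Query strategy: uncertainty sampling (probability closest to 0.5).
        idx = min(range(len(unlabelled)),
                  key=lambda i: abs(predict_proba(model, unlabelled[i]) - 0.5))
        x = unlabelled.pop(idx)
        X.append(x)
        y.append(oracle(x))  # query the oracle for this point's label
        queried.append(x)
        model = train(X, y)  # update the model with the enlarged labelled set
    return model, queried, unlabelled


def simulated_oracle(x: float) -> int:
    """Hypothetical 'expert': labels points above 0.3 as positive."""
    return int(x > 0.3)


pool = [i / 10 for i in range(-19, 20)]  # 39 unlabelled points in [-1.9, 1.9]
model, queried, remaining = active_learning_loop(pool, simulated_oracle, [-2.0, 2.0], [0, 1], budget=5)
print(queried)
```

Replacing the `min(...)` scoring line with another rule, such as entropy or a diversity criterion, changes the query strategy without touching the rest of the loop.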

Tools like modAL (a Python library for active learning built on scikit-learn), Label Studio (a data-labelling tool), and deep learning frameworks like PyTorch and TensorFlow can be helpful for implementation.

By following this cyclical process, one can often build accurate models with far fewer labelled examples, making machine learning more cost-effective and efficient.

Benefits and Limitations

Benefits:

- Reduces labelling cost significantly.

- Can achieve strong model performance with fewer labelled data points.

- Particularly valuable for imbalanced datasets.

- Allows targeted improvement in model weaknesses.

Limitations:

- Requires an oracle (usually a human) to label queried data.

- Implementation complexity can be high.

- Can be computationally expensive when the unlabelled pool is large, since every query round must score each candidate.

- The best query strategy varies with the task and dataset, so choosing one may take experimentation.

Despite its challenges, active learning offers a pragmatic solution when faced with limited labelled data, which is increasingly common in complex AI applications. It aligns well with the resource constraints faced by startups and researchers alike.

Professionals looking to explore cutting-edge approaches like active learning can benefit immensely from enrolling in a data scientist course in Hyderabad that dives deep into modern ML workflows and data annotation strategies.

Conclusion

As datasets grow larger but labelling becomes more expensive, active learning stands out as a practical approach to improving model performance without incurring massive annotation costs. By focusing labelling effort on the most informative examples, it makes better use of resources and can shorten development cycles. Whether in healthcare, finance, or autonomous systems, active learning is making its mark.

For those keen on mastering such transformative techniques, a data scientist course in Hyderabad can provide the right mix of theory, practical exposure, and mentorship to prepare for the data-driven future.

ExcelR – Data Science, Data Analytics and Business Analyst Course Training in Hyderabad

Address: Cyber Towers, PHASE-2, 5th Floor, Quadrant-2, HITEC City, Hyderabad, Telangana 500081

Phone: 096321 56744